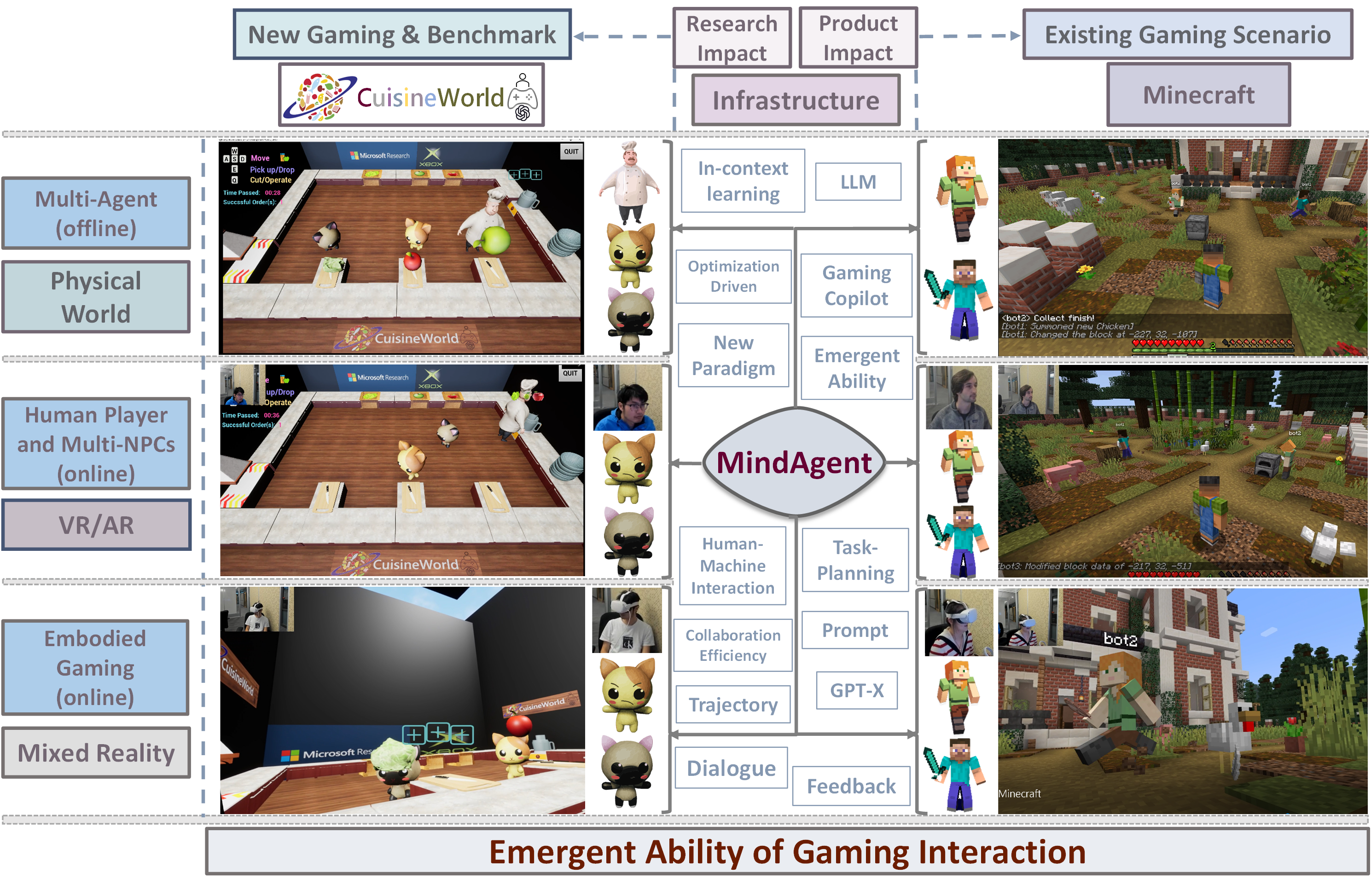

We present MindAgent, an infrastructure for emergent gaming interaction. It enables multi-NPC collaboration and human-NPC collaboration.

Large Language Models (LLMs) can perform complex scheduling in a multi-agent system and can coordinate these agents to complete sophisticated tasks that require extensive collaboration. However, despite the introduction of numerous gaming frameworks, the community lacks sufficient benchmarks for building general multi-agent collaboration infrastructure that encompasses both LLM and human-NPC collaboration. In this work, we propose a novel infrastructure, MindAgent, to evaluate emergent planning and coordination capabilities for gaming interaction. In particular, our infrastructure leverages existing gaming frameworks to i) require understanding of the coordinator for a multi-agent system, ii) collaborate with human players via proper instructions without fine-tuning, and iii) establish in-context learning on few-shot prompts with feedback. Furthermore, we introduce CUISINEWORLD, a new gaming scenario and related benchmark that measures multi-agent collaboration efficiency and supervises multiple agents playing the game simultaneously. We conduct comprehensive evaluations with a new automatic metric, the collaboration score (CoS), for calculating collaboration efficiency. Finally, our infrastructure can be deployed in real-world gaming scenarios in a customized VR version of CUISINEWORLD and adapted to the existing, broader “Minecraft” gaming domain. We hope our findings on LLMs and the new infrastructure for general-purpose scheduling and coordination can help shed light on how such skills can be obtained by learning from large language corpora.

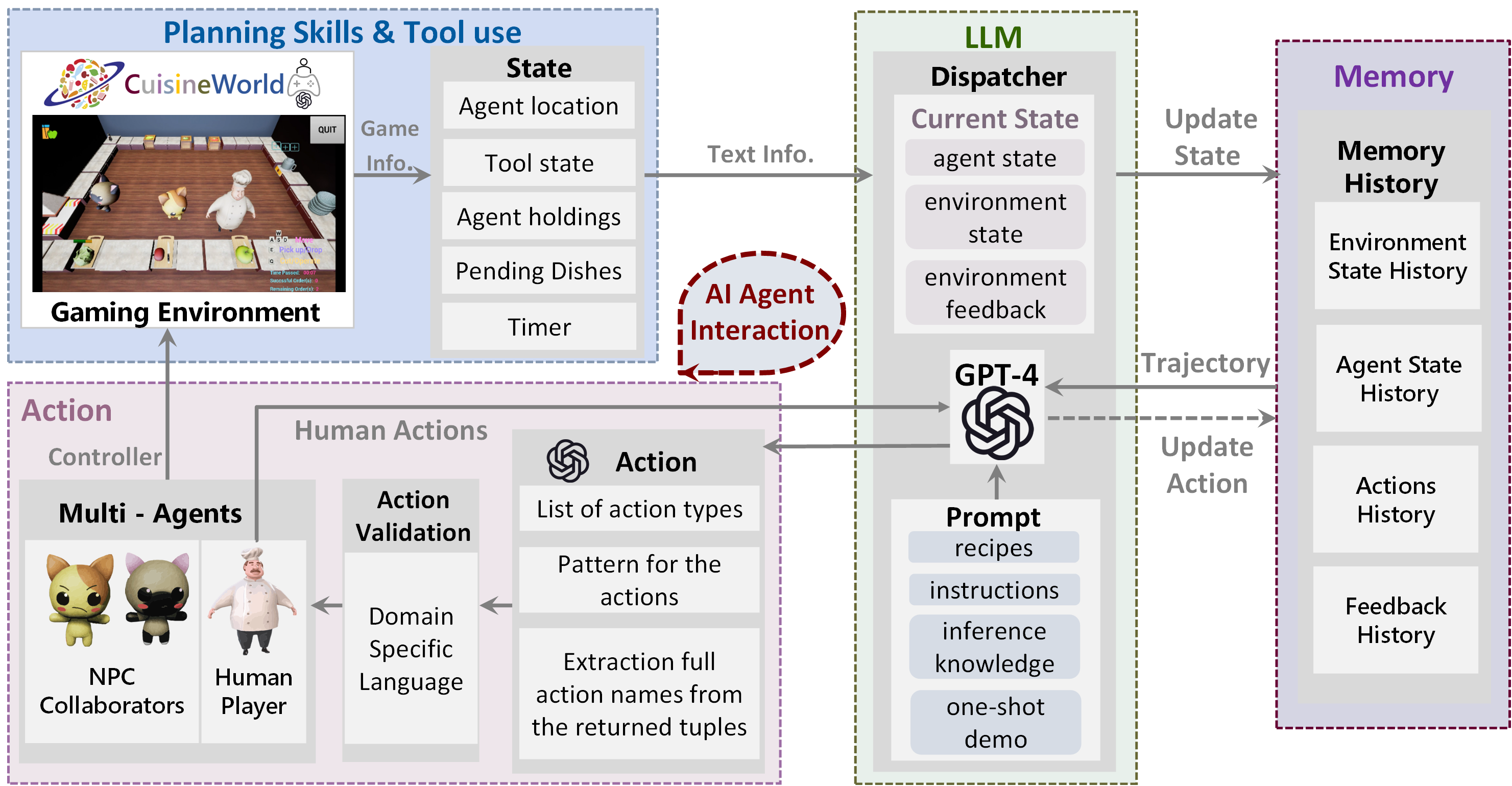

Overview of the MindAgent architecture. Planning Skill and Tool Use: The game environment requires diverse planning skills and tool use to complete tasks. It emits related game information. This module also converts relevant game data into a structured text format so the LLMs can process it. LLM: The main workhorse of our infrastructure, which makes decisions and acts as the dispatcher for the multi-agent system. Memory History: A storage utility that stores relevant information. Action Module: Extracts actions from text inputs, converts them into a domain-specific language (DSL), and validates the DSL so that it does not cause errors when executed.
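
To make the data flow in the figure concrete, the following is a minimal sketch of one dispatch step, not the actual MindAgent implementation. It assumes a hypothetical DSL of the form `name(agent, location, ...)`. The action names, their arities, and the helpers `state_to_text`, `extract_actions`, `validate`, and `dispatch_step` are all illustrative assumptions. The LLM call is omitted; its text output is passed in as a plain string.

```python
import re
from dataclasses import dataclass

# Hypothetical action vocabulary (an assumption for illustration):
# action name -> number of arguments it expects.
ACTION_ARITY = {"goto": 2, "get": 3, "put": 2, "activate": 2, "noop": 1}

# Matches calls such as "goto(agent0, stove)" anywhere in free-form text.
ACTION_PATTERN = re.compile(r"([a-z_]+)\(([^()]*)\)")


@dataclass(frozen=True)
class Action:
    name: str
    args: tuple


def state_to_text(state: dict) -> str:
    """Convert game data into a structured text format for the LLM prompt."""
    return "\n".join(f"{key}: {state[key]}" for key in sorted(state))


def extract_actions(llm_output: str) -> list:
    """Action Module, step 1: pull DSL-like calls out of the LLM's text."""
    actions = []
    for name, raw_args in ACTION_PATTERN.findall(llm_output):
        args = tuple(a.strip() for a in raw_args.split(",") if a.strip())
        actions.append(Action(name, args))
    return actions


def validate(action: Action, agents: set, locations: set) -> bool:
    """Action Module, step 2: reject actions that would fail on execution."""
    expected = ACTION_ARITY.get(action.name)
    if expected is None or len(action.args) != expected:
        return False  # unknown action or wrong number of arguments
    if action.args[0] not in agents:
        return False  # first argument must name a known agent
    if expected >= 2 and action.args[1] not in locations:
        return False  # second argument must name a known location
    return True


def dispatch_step(llm_output: str, agents: set, locations: set, memory: list) -> list:
    """Keep only valid actions and record them in the memory history."""
    valid = [a for a in extract_actions(llm_output) if validate(a, agents, locations)]
    memory.append(valid)
    return valid


if __name__ == "__main__":
    print(state_to_text({"agent0": "at storage", "stove": "empty"}))
    history = []
    reply = "Plan: goto(agent0, stove), fly(agent1, moon), noop(agent1)"
    print(dispatch_step(reply, {"agent0", "agent1"}, {"stove"}, history))
```

In this sketch, an unknown action such as `fly(agent1, moon)` is filtered out before execution, which mirrors the validation role the caption assigns to the Action Module. A fuller version might feed rejected actions back to the LLM as feedback, but that behavior is not specified here.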